Apple: AI With Privacy & Security, A Unique Combination (NASDAQ:AAPL)


I rate Apple (NASDAQ:AAPL) a buy, as the company is setting up a potential new upgrade cycle across its devices with the new “Apple Intelligence,” opening new opportunities for revenue growth in the next few years. Privacy and security have been two of the main pillars of Tim Cook’s leadership since he took over as CEO in 2011, and they are frequently overlooked by analysts. As AI becomes more relevant and increasingly a commodity on most of our devices, people might become more aware of how tech companies are using their data for machine learning and generative AI. I do not know what the final outcomes, negative and positive, of AI embedded in different aspects of our lives will be, or how those outcomes could impact the global economy, politics, culture, etc. Given these uncertainties, I think that Apple’s security and privacy features will become a coveted factor in the long term, which, by itself, might further reinforce its already strong competitive advantages. In my previous article, written in February 2024, I found an intrinsic value of around $212 per share. Now I need to make some adjustments.

Context

The markets were expecting big news about AI at WWDC 2024, and Apple met most of these expectations by launching Apple Intelligence, its own name for its AI. It comprises multiple highly capable generative models that are specialized for the user’s everyday tasks and adapt on the fly to their current activity.

We’ve seen at the conference that most of today’s tasks are associated with writing and refining texts, prioritizing and summarizing notifications, creating playful images for conversations with family and friends, and taking in-app actions to simplify interactions across apps. However, some analysts still think that Apple is behind in AI, while others do not fully understand the integration with OpenAI and Apple’s relationship with Google. Elon Musk criticized Apple’s partnership with OpenAI, saying that he would ban Apple’s devices; in my opinion, he did so without understanding that partnership.

I noticed that Apple’s privacy and security policy helps explain why Apple needed to take a completely different approach to its AI development compared to what its main peers were doing: collecting all the personal data of their users to use as input for their AI.

Privacy and security: one pillar of Tim Cook’s successful leadership in the last decade

Since Tim Cook took over as CEO of Apple in 2011, he has set six pillars on which his leadership has been built. I took this information from the book Tim Cook: The Genius Who Took Apple to the Next Level, written by Leander Kahney:

- Accessibility: Apple considers accessibility a fundamental human right, and technology should be accessible to everybody in the world.

- Education: Apple considers education a fundamental human right, and high-quality education should be available for everybody in the world.

- Inclusion and Diversity: Apple considers that diverse teams are what make innovation possible.

- Environment: Apple boosts environmental responsibility in the design and manufacturing of its products.

- Privacy and Security: Apple considers privacy a fundamental human right. Every Apple product is designed from scratch to protect the privacy and security of each user.

- Responsibility of suppliers: Apple educates and empowers its different suppliers within its supply chain, while helping in the conservation of the most precious environmental resources.

When we think about Tim Cook’s successes in the last decade, we should think of these six pillars that drove his most important decisions. As you may notice, Cook wanted to reinforce the company’s mission, which is mostly associated with the brand. In the view of Apple’s users, the brand represents status, an ecosystem, Steve Jobs’s vision, etc.

Over the years, Tim Cook has learned that users also associate another factor with Apple’s brand: privacy and security. This means that Apple was not using all the data of each user while storing it in the cloud, as is done by other big tech companies. In this sense, Apple needed to think outside the box to further develop its AI systems without using user data.

At the last WWDC, Apple showed that it is using its own silicon to run the new AI features, but there were no comments about how Apple trained those models, particularly given that it was not using its users’ data. We know how Tim Cook has run Apple’s operations and manufacturing, and that he is a genius at managing the company’s supply chain through outsourcing, so it should not be a surprise that Cook would seek partnerships, often with unexpected partners, for the development of Apple Intelligence.

Apple’s partnerships with Google and OpenAI

I was surprised to learn that Apple had been relying on Google’s and Amazon’s cloud services to store data for its products for years. In the particular case of Apple Intelligence, Apple spent months using Google’s data centers to train its new AI models, and Apple just took the information to feed its own AI model. Most of the impressive features of AI models have to be at least partly run in massive data centers, which companies like Google have spent many years building.

In my view, it’s a brilliant strategy, as Tim Cook did not have to invest a huge amount of money in data centers while not breaking one of the company’s main commitments or pillars, privacy and security. In this sense, Apple user data was never used to train Apple Intelligence, and Private Cloud Compute (PCC) was not used for that purpose either.

At the last WWDC, Apple explained that its AI would run mainly on-device and that, when a request is more complex, it can be handed off to the PCC, which is a cloud designed specifically for private AI processing. This means that the personal user data sent to the PCC is not accessible to anyone, not even Apple. Furthermore, PCC deletes the user’s data after fulfilling the request, and no user data is stored in any form after the response is returned.

This partnership has been documented and audited, and it is mentioned that Apple’s AI models were trained using a combination of methods, including Google’s Tensor Processing Units (TPUs) and both cloud and on-premise GPUs. TPUs are chips designed for artificial intelligence that Google rents out through its cloud service as an alternative to Nvidia graphics processing units.

In regard to OpenAI, it was mentioned at the last WWDC that there will be an integration with ChatGPT. Using ChatGPT is optional, since the iPhone will ask the user first. If the user wants to use ChatGPT, OpenAI will not have access to all the user’s data on the device but only to the data directly related to the request. This is thanks to the Secure Enclave, which is a secure subsystem integrated into Apple’s chips to offer an extra layer of security.

Another protection related to the ChatGPT integration is the agreement with Apple itself:

Privacy protections are built in when accessing ChatGPT within Siri and Writing Tools—requests are not stored by OpenAI, and users’ IP addresses are obscured. Users can also choose to connect their ChatGPT account, which means their data preferences will apply under ChatGPT’s policies.

Thus, even when ChatGPT has very limited access to the user’s data, that information will not be stored by OpenAI, and users’ IP addresses will be obscured. There is a very important difference between connecting with ChatGPT while using Apple Intelligence and connecting with ChatGPT directly through a ChatGPT account. The user’s data is way more protected using the first choice; as such, those analysts who think that both ways are the same are wrong.

Apple is not behind in AI; it’s going at its own pace

With all this information that I presented, it’s clear that Apple could not develop its AI models the way Gemini or ChatGPT were developed, since, in my opinion, neither model takes into account the privacy and security factor, which is one of the main pillars of Tim Cook’s leadership in the last decade. We know that the devices that will get the new AI features are those with the A17 Pro, M1, M2, M3, or M4 chips, which, in the particular case of the iPhone, means the 15 Pro onward.

In my view, knowing how Tim Cook manages Apple’s operations, I think that Cook wants to see how much consumers use ChatGPT for their daily tasks, and this might be assessed with the first devices sold with the new AI system. If ChatGPT is used more frequently than expected, Apple would have the incentive to develop a new strategy to further develop its AI models for more complex requirements and reduce its reliance on OpenAI services.

On the contrary, if ChatGPT is not used frequently by users, Apple would know that its focus and energy should be oriented toward further developing its own AI models for daily tasks. This is one of the aspects not frequently appreciated by Apple detractors, since Tim Cook proves again that he is the most shareholder-oriented CEO among the big tech companies, making gradual investments to get a better idea of how to deploy Apple’s capital to deliver the maximum value for shareholders. I wrote an article in July 2023 about Apple’s intangibles and how unique they are, even compared with those of other big techs.

I think that other CEOs would have rushed into the AI race, investing big sums in data centers and training the AI models in those data centers with users’ data, breaking one of the key pillars of Apple’s intangibles: the protection of the user data.

In general, it is expected that when users try out most of the Apple AI features on their new devices, most of the work will be done on the device itself. More intensive tasks will be handled in the PCC, and as a last resort, the ChatGPT option will be offered to them. Now, we’ve got a better picture of why Apple needed to solve the problem associated with the AI models and the privacy and security of the user’s data.

Valuation

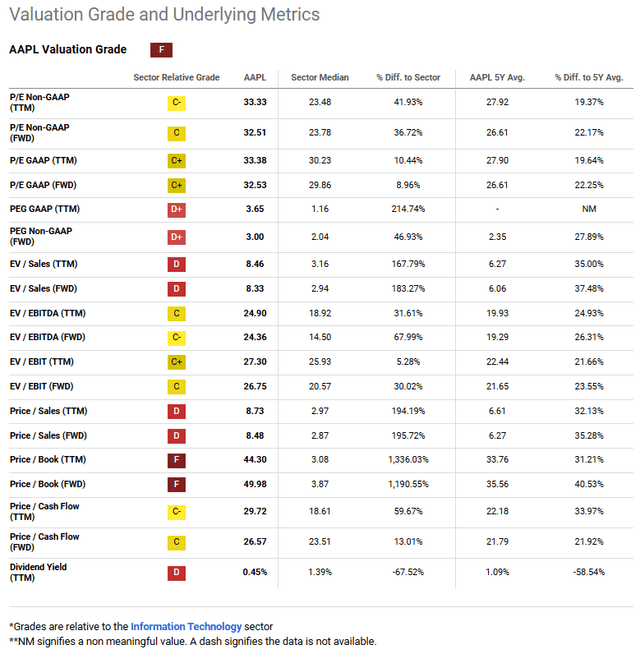

According to different multiples, we can see that Apple seems fairly valued:


In the table above, we can see that most of the multiples indicate that the stock is fairly valued or expensive; for instance, the P/E in its different forms shows that Apple is fairly valued compared to the sector. However, a limitation of this ratio is that it’s assumed that Apple and its comparables have the same quality, which is not necessarily the case.

On the other hand, the PEG ratio is a multiple that draws the attention of “growth” investors, and it indicates that Apple is more expensive than its comparables. The main problem is that the ratio is not incorporating the growth opportunities associated with Vision Pro prospects.

Furthermore, I do not use any valuation multiple that is based on sales, such as EV/Sales or Price/Sales, particularly when assessing a high-quality company like Apple; the reason is that these multiples do not consider the net income or the FCF, which are far more important metrics to gauge the real performance of a high-quality company. So the fact that Apple is more expensive than its comparables using these ratios does not tell me that much.

The ratios that I consider the most important ones are graded C, C+ or C-, such as P/E, EV/EBIT, and P/Cash Flow, so according to these ratios, Apple is mostly fairly valued; the P/Cash Flow (FWD), which is a very important ratio in my view, is graded C, which indicates even a reasonable price. Considering that Apple has a very consistent generation of free cash flows each year, I would have expected that this multiple would be way more expensive than its comparables.

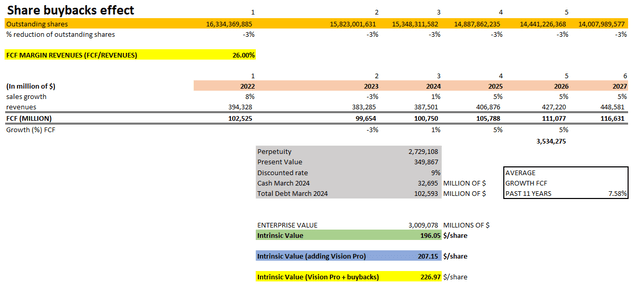

In my view, we need a method that incorporates Vision Pro’s growth prospects and the share buyback’s impact on Apple’s valuation, which is a very important factor that is mostly overlooked. As such, I propose a DCF model that will include both important factors to get a better approximation of Apple’s intrinsic value.

Assumptions

- Outstanding shares: 15,348,311,582 (projected for 2024)

- FCF margins: 26% (average of the last 10 years).

- Revenue growth: 1% for FY 2024, 5% for FY 2025, and 5% beyond 2025 (consensus made adjustments in the revenue growth considering the possibilities with Apple Intelligence).

- Cash as of March 2024: $32,695 million.

- Debt as of March 2024: $102,593 million.

- Discount rate: 9%.

- FCF growth in perpetuity: 5.7% annual (CAGR FCF growth from 2013 to 2024: 7.58%).

- I use the Vision Pro’s assumptions made by BofA Securities analyst Wamsi Mohan and UBS analyst David Vogt.


We should focus first on the “green” intrinsic value, which does not include the Vision Pro and buyback effects. To find the perpetuity, I used the formula:

Perpetuity = FCF 2027/(discount rate – g).

where g = FCF growth in perpetuity, which was assumed to be 5.7% annual, and the discount rate is 9%.

The FCFs are calculated by multiplying the revenues projected by the FCF margins assumed at 26%; in this way, I get the FCFs estimated for the next few years.

With perpetuity, we calculate the present value of all the FCFs beyond 2026. Then, we calculate the enterprise value using the following:

Enterprise Value = Present Value of FCF (from 2024 to 2026) + Perpetuity + Cash – Total Debt.

Finally, the intrinsic value is calculated by taking the enterprise value and dividing it by the outstanding number of shares. In this way, we could get $196.05 per share as an intrinsic value under the assumptions presented.
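The steps above can be sketched in a few lines of Python. This is only a minimal sketch, not the spreadsheet behind the figures in this article: the function name `dcf_value_per_share`, the year-end discounting convention, and the starting revenue used in the usage lines (Apple's reported FY 2023 revenue) are assumptions made for illustration, so the result is not expected to match $196.05 exactly.

```python
def dcf_value_per_share(base_revenue, growth_rates, fcf_margin,
                        discount_rate, perpetual_growth,
                        cash, debt, shares):
    """Per-share value from projected FCFs plus a growing perpetuity.

    growth_rates holds one rate per projected year; the FCF of the final
    year (2027 in the article) only feeds the perpetuity formula.
    Money inputs and shares must use the same scale (e.g. millions).
    """
    if discount_rate <= perpetual_growth:
        raise ValueError("discount rate must exceed perpetual growth")

    # Project revenue year by year and convert it to free cash flow.
    revenue = base_revenue
    fcfs = []
    for growth in growth_rates:
        revenue *= 1 + growth
        fcfs.append(revenue * fcf_margin)

    explicit_fcfs, terminal_fcf = fcfs[:-1], fcfs[-1]

    # Present value of the explicit years (assumed year-end cash flows).
    pv_explicit = sum(fcf / (1 + discount_rate) ** year
                      for year, fcf in enumerate(explicit_fcfs, start=1))

    # Perpetuity = FCF of the final year / (discount rate - g),
    # discounted back from the last explicit year.
    perpetuity = terminal_fcf / (discount_rate - perpetual_growth)
    pv_perpetuity = perpetuity / (1 + discount_rate) ** len(explicit_fcfs)

    value = pv_explicit + pv_perpetuity + cash - debt
    return value / shares


# Usage with the article's assumptions, in $ millions and millions of shares.
# base_revenue is an assumed starting point (FY 2023 reported revenue).
estimate = dcf_value_per_share(
    base_revenue=383_285,
    growth_rates=[0.01, 0.05, 0.05, 0.05],  # FY 2024 to FY 2027
    fcf_margin=0.26,
    discount_rate=0.09,
    perpetual_growth=0.057,
    cash=32_695,
    debt=102_593,
    shares=15_348.311582,
)
print(f"Illustrative value per share: ${estimate:.2f}")
```

The guard on `discount_rate <= perpetual_growth` reflects a property of the perpetuity formula itself: it only produces a finite, positive value when the discount rate is higher than the perpetual growth rate.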

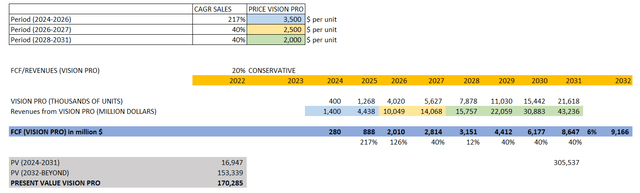

Now, I estimated the projections for the Vision Pro units sold, which I put at 400,000 units for 2024, the first year. This is well below what Ming-Chi Kuo was originally estimating for 2024, around 800,000 units, before he said that he needed to reduce his expectations to 400,000 units. I always took the most conservative number for my projections:


It’s worth noting that I am reducing the price of the Vision Pro for newer versions in different periods, so I assume a FCF margin of 20% for the Vision Pro, which is lower than the 26% FCF margins of Apple’s overall sales. Being aware of how demanding Tim Cook is with Apple’s manufacturing efficiency, I think that is a reasonable FCF margin. Then, I calculate the present value of all the FCFs to be generated in the future to be added to the enterprise value previously calculated.
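The Vision Pro adjustment described above can be sketched as follows. This is a hypothetical illustration: the function name `vision_pro_fcf_present_value` is made up, and the unit volumes and prices in the usage lines are placeholders rather than the projections used in this article; only the 20% FCF margin and the 9% discount rate come from the text.

```python
def vision_pro_fcf_present_value(units_by_year, price_by_year,
                                 fcf_margin=0.20, discount_rate=0.09):
    """Present value of Vision Pro free cash flows.

    Each year's FCF is units * price * fcf_margin, discounted assuming
    year-end cash flows, with the first list entry one year out.
    """
    if len(units_by_year) != len(price_by_year):
        raise ValueError("units and prices must cover the same years")

    present_value = 0.0
    for year, (units, price) in enumerate(
            zip(units_by_year, price_by_year), start=1):
        fcf = units * price * fcf_margin
        present_value += fcf / (1 + discount_rate) ** year
    return present_value


# Placeholder inputs: unit volumes and a declining price path are
# assumptions for illustration only.
placeholder_units = [400_000, 600_000, 900_000]
placeholder_prices = [3_499, 3_000, 2_500]
pv = vision_pro_fcf_present_value(placeholder_units, placeholder_prices)
print(f"Illustrative present value: ${pv / 1e6:,.0f} million")
```

Dividing the resulting present value by the share count gives the per-share uplift that is added on top of the base intrinsic value.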

Thanks to the Vision Pro FCF generation over the years, we see that our intrinsic value has increased from $196.05 to $207.15 per share. Finally, I make a projection of the outstanding number of shares until 2027, considering a 3% annual rate of reduction in the share count. In my previous article, I showed that Apple has been reducing its share count at that pace since its last 4-for-1 split in 2020. So, if I take the sum of the enterprise value plus the present value of the Vision Pro and divide it by the share count in 2027, I get an intrinsic value of $226.97. I would get an even higher intrinsic value if I took the share count in 2030, as I expect that the share buyback policy will be kept until that year, but I prefer to be more conservative.
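The arithmetic in this step can be checked with a short Python sketch. It assumes that the 3% reduction is applied three times (2024 to 2027) to the projected 2024 share count; the variable names are illustrative.

```python
shares_2024 = 15_348_311_582      # projected outstanding shares for 2024
value_base = 196.05               # intrinsic value without Vision Pro
value_with_vision_pro = 207.15    # intrinsic value including Vision Pro
annual_share_reduction = 0.03     # buyback pace assumed in the article
years = 3                         # assumption: 2024 -> 2027

# Total present value implied by the per-share Vision Pro uplift.
implied_vision_pro_pv = (value_with_vision_pro - value_base) * shares_2024

# Share count after three years of 3% annual reductions.
shares_2027 = shares_2024 * (1 - annual_share_reduction) ** years

# Same total value spread over fewer shares.
value_after_buybacks = value_with_vision_pro * shares_2024 / shares_2027

print(f"Implied Vision Pro present value: ${implied_vision_pro_pv / 1e9:.1f} billion")
print(f"Projected 2027 share count: {shares_2027 / 1e9:.2f} billion")
print(f"Value per share after buybacks: ${value_after_buybacks:.2f}")
```

Under these assumptions the last line comes out at about $226.97, in line with the figure above, and the per-share uplift from the Vision Pro corresponds to a total present value of roughly $170 billion.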

I am assuming a discount rate of 9%, so if the Fed decided to reduce interest rates, I would need to reduce my discount rate, which could boost the intrinsic value even more.

So, I consider that the current stock price is not expensive, particularly if the investor wants to keep the shares for the next few years. I have accumulated shares since 2020, when the stock markets were impacted by the COVID news, and then in 2022, when the Fed started raising interest rates to control inflation. In the end, I had an average cost of $121 per share. I will keep my shares for the long term.
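To see why a lower discount rate matters so much, consider the multiple that the perpetuity formula applies to the final-year FCF, 1/(discount rate – g). The sketch below uses the 5.7% perpetual growth from the assumptions; the alternative discount rates of 8.5% and 8% are hypothetical scenarios, not forecasts, and the function name is illustrative.

```python
def terminal_multiple(discount_rate, perpetual_growth):
    """Multiple applied to next year's FCF by the perpetuity formula."""
    if discount_rate <= perpetual_growth:
        raise ValueError("discount rate must exceed perpetual growth")
    return 1.0 / (discount_rate - perpetual_growth)


perpetual_growth = 0.057  # from the assumptions list

# 9% is the article's rate; 8.5% and 8% are hypothetical scenarios.
for rate in (0.09, 0.085, 0.08):
    multiple = terminal_multiple(rate, perpetual_growth)
    print(f"discount rate {rate:.1%}: perpetuity = {multiple:.1f}x FCF")
```

Because the gap between the discount rate and g is only 3.3 percentage points, even a half-point cut in the discount rate raises the perpetuity multiple noticeably, which is why the intrinsic value is so sensitive to this assumption.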

Risks

One of the main risks is associated with the regulatory issues in the EU and the US, mostly related to accusations of antitrust and privacy violations, as well as harmful competitive strategies. I think that the fact that Apple is accused of being a monopoly means that the company has done very well reinforcing its competitive advantages.

However, this could mean that Apple might need to reconfigure the way iPhones direct their users to use different apps and search engines. I think that the biggest risk is that Apple has to break its own ecosystem of privacy; nevertheless, Apple has very capable management under Tim Cook’s leadership to defend Apple’s main interests, those related to the users, and those related to its shareholders.

In the end, we already know how Apple protects its users’ data, which is one very powerful reason to not break its ecosystem. I think that it’s in the best interest of regulators to not destroy the best benefits that Apple offers to society, which are privacy and security. It’s uncertain how these allegations and regulatory issues would end up impacting Apple’s business, but we know very well how successfully Tim Cook adapts Apple to new challenges, so I feel very comfortable with the management’s ability to handle all these challenges.

I found an article written in April 2012 about the different regulatory issues impacting Apple at that time, such as factory conditions, antitrust, ecosystems, privacy, etc. Apple is therefore not facing regulatory issues for the first time, and the management has been able to handle these cases properly over the years.

Other risks are more associated with China, since there is no ChatGPT or any other Western AI model available there; thus, Tim Cook would need to establish new partnerships there. There could be some difficulties in China, as I do not know if Apple would be allowed to have its AI models there, even if these models do not store data anywhere. The important thing is that the Chinese regulators need to be convinced that Apple is not taking data from Chinese people to train its own AI models.

Given Tim Cook’s ability to reach new agreements and partnerships, I think that it’s feasible that he would be able to fulfill his goals in China. That’s why it’s very important to have high-quality management running the company, and Tim Cook has a solid track record of reaching very convenient agreements. For instance, the recent agreement with OpenAI does not involve any payment by Apple, which, of course, surprised several pundits, even those who are Tim Cook’s detractors.

Conclusion

I think that Apple has taken a very important step in its AI development according to its own requirements. In this article, you’ve seen that Apple could not take the same path in that development as that of its main peers like Google, Microsoft, or Amazon. The privacy and security of the user’s data might be one of the most coveted factors in the future, as AI will impact different aspects of our lives, and most people might seek that privacy and security as a way to protect and control their own data while avoiding the use of that data for other purposes.